Trigger warning: This page addresses digital sexualized violence.

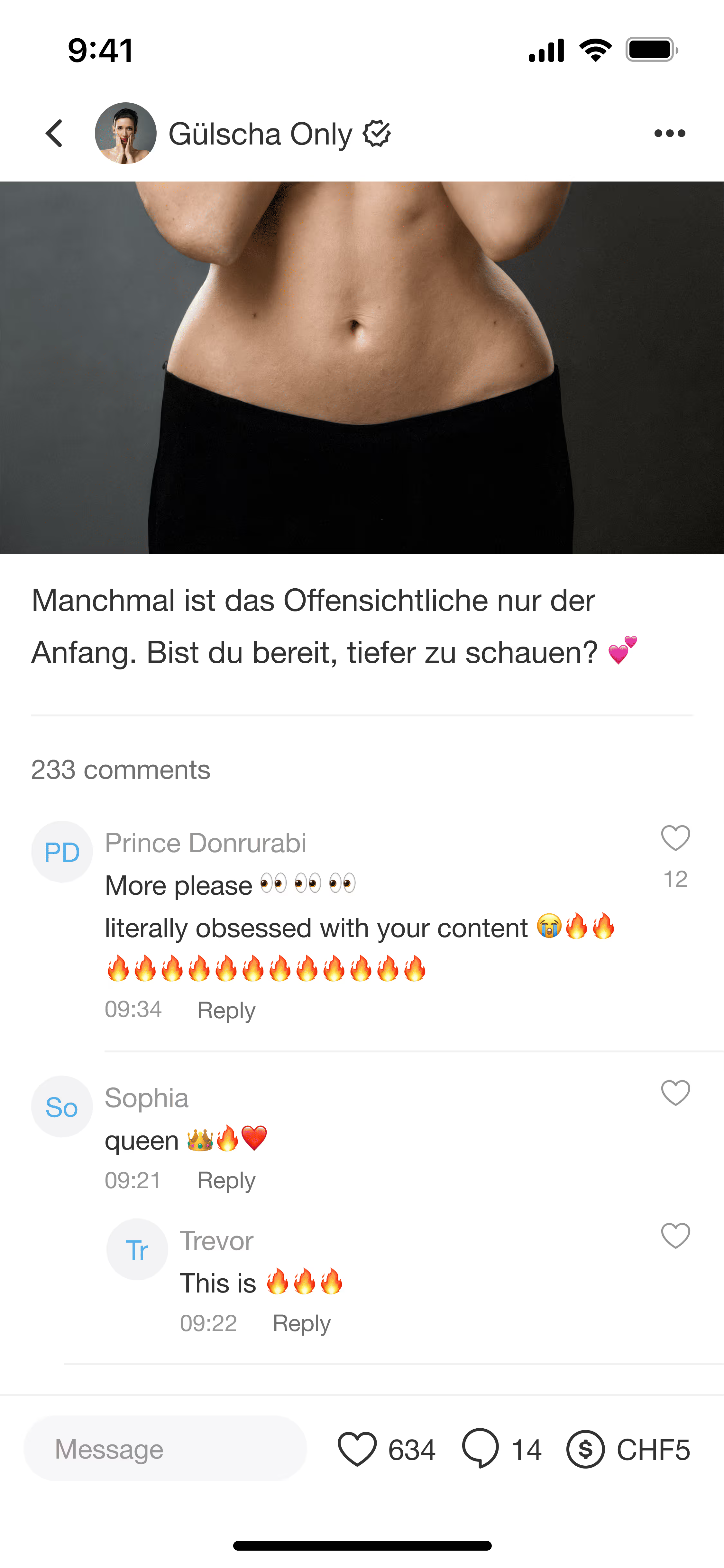

Imagine this: You come across Gülsha Adilji – or even yourself – on a platform like OnlyFans. The face is familiar, yet not a single image is real.

With this deceptively realistic mock-up account, we show what happens online every single day, often without the person's knowledge. We want to highlight how easily intimate deepfakes can be created today, and how quickly they can destroy someone's reputation, privacy, and sense of security. We also show ways you can protect yourself.

The topless photos were generated with artificial intelligence by AI artist Basil Stücheli.

Here, Basil shows how he turns an original photo by Geri Born from the Ringier archive into a deceptively realistic fake:

One in three young people has already encountered sextortion-like content.

(Source: clickandstop.ch, 2024, CH context)

80% of victims do not come forward out of fear or shame.

(Source: Europol, IOCTA 2023, EU context)

AI can create deceptively realistic fake nudes from a single selfie.

(Source: Sensity AI, 2023, “State of Deepfakes”)

98% of all deepfake videos on the internet are pornographic.

(Source: Sensity AI, 2023, “State of Deepfakes”)

Welcome to WHAT THE FAKE. Learn how to protect yourself at clickandstop.ch.

In cooperation with Child Protection Switzerland & clickandstop.ch

Important note: This is a staged campaign account, not a real one.

The campaign is supported by Kinderschutz Schweiz and Ringier. Gülsha Adilji is aware of and supports the initiative.